This tutorial will walk you through the whole submission process. By following it step by step, you will get your results on the hidden test set, and, if you wish, have your results listed on the public leaderboard.

Note: If the CodaLab worksheet does not work properly or appears unresponsive, you can email your code and checkpoints directly to me (cst_hanxu13@163.com).

Sign up on the CodaLab website and fill in your profile information.

If you already have an account, please sign in.

Click My Dashboard.

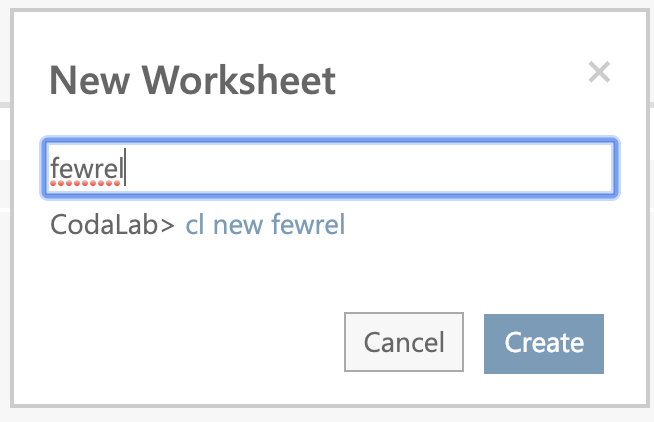

Click New Worksheet to create your own worksheet.

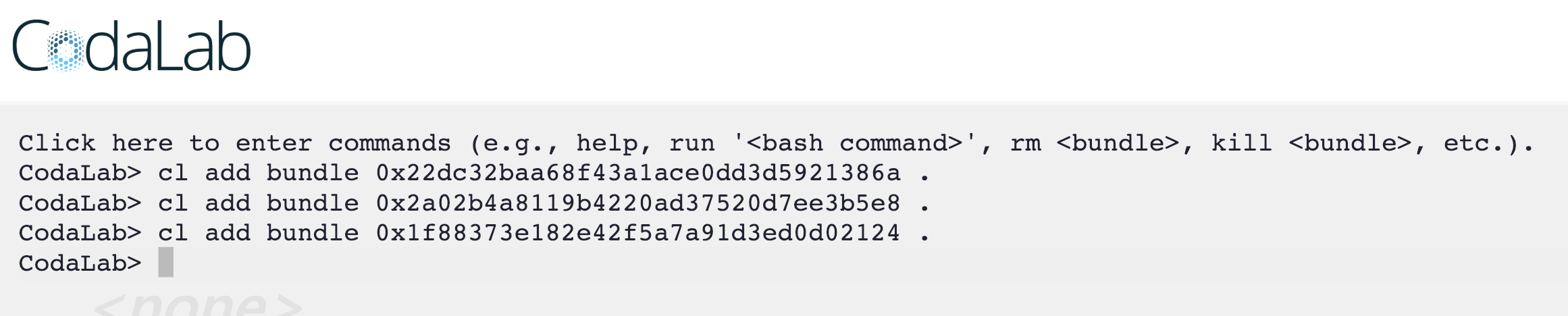

In the web interface terminal at the top of the page, type the following commands:

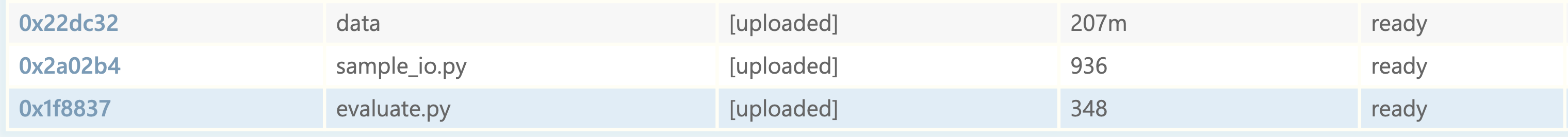

Add the train/val data:

cl add bundle 0x22dc32baa68f43a1ace0dd3d5921386a .

Add a script to sample an N-way K-shot dataset from a data division:

cl add bundle 0x2a02b4a8119b4220ad37520d7ee3b5e8 .

Add the code for evaluating final results:

cl add bundle 0x1f88373e182e42f5a7a91d3ed0d02124 .
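To make "N-way K-shot" concrete, here is a minimal sketch of how one evaluation episode could be sampled. It is not the code of the sampling script added above: the function name `sample_episode`, the `{relation: [instances]}` layout, and the toy data are all assumptions made for illustration.

```python
import random


def sample_episode(data, n_way, k_shot, rng):
    """Sample one N-way K-shot episode from a {relation: [instances]} mapping."""
    # Pick N distinct relations to act as the classes of this episode.
    relations = rng.sample(sorted(data), n_way)
    # Choose which of the N classes the single query instance belongs to.
    target = rng.randrange(n_way)
    support = []
    query = None
    for idx, rel in enumerate(relations):
        # Draw K support instances, plus one extra for the target class.
        extra = 1 if idx == target else 0
        picked = rng.sample(data[rel], k_shot + extra)
        support.append(picked[:k_shot])
        if extra:
            query = picked[k_shot]
    return {"support": support, "query": query, "label": target}


# Toy data standing in for a data division (hypothetical contents).
toy = {f"rel{r}": [f"rel{r}-sent{i}" for i in range(10)] for r in range(8)}
rng = random.Random(12345)  # a fixed seed makes the sampled episodes repeatable
episodes = [sample_episode(toy, n_way=5, k_shot=5, rng=rng) for _ in range(100)]
print(len(episodes), len(episodes[0]["support"]), len(episodes[0]["support"][0]))
```

Each episode holds N support sets of K instances each, one query instance that is disjoint from the support sets, and the index of the query's true class.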

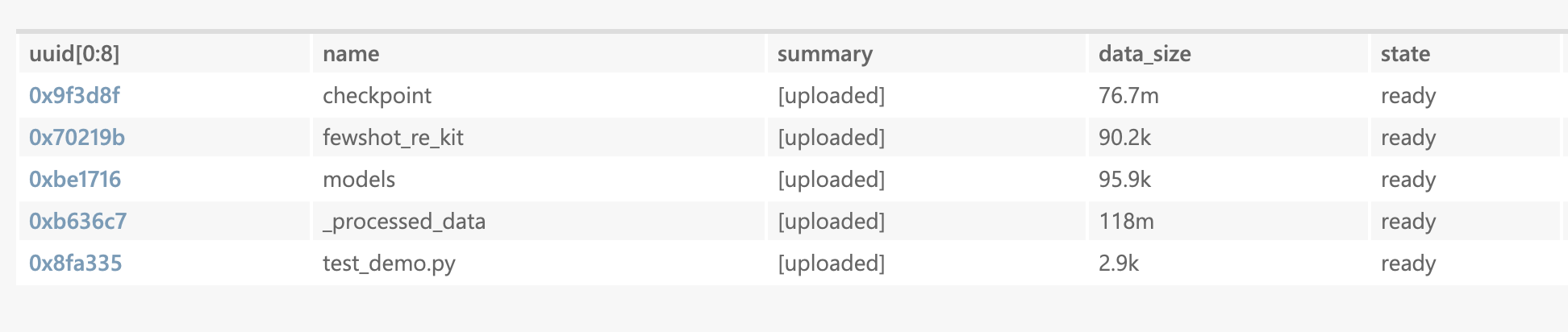

Click the Upload button in the top-right corner to upload your own models and parameters. They will then appear in the worksheet contents as several bundles. For example, we uploaded our own demo models and parameters as follows:

Write and upload the evaluation script. For example, we uploaded our demo evaluation script as follows:

The script contains:

python sample_io.py data/val.json 100 5 5 12345 input > input.json

python sample_io.py data/val.json 100 5 5 12345 output > output.json

python test_demo.py input.json > predict.json

python evaluate.py predict.json output.json

Note: More details about the data, evaluation scripts, and demo examples can be found in our worksheet.
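The last step of the script compares the predictions with the sampled answers. As a rough sketch of that comparison, and not the actual evaluate.py, the snippet below assumes both files hold a flat JSON list of predicted or true class indices; that file format and the function name `accuracy` are assumptions.

```python
import json


def accuracy(predictions, answers):
    """Return the fraction of positions where the prediction equals the answer."""
    if len(predictions) != len(answers):
        raise ValueError("prediction and answer lists differ in length")
    if not answers:
        raise ValueError("no answers to evaluate against")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Stand-ins for the contents of predict.json and output.json (assumed format).
predict = json.loads("[0, 3, 1, 4, 2]")
output = json.loads("[0, 3, 2, 4, 2]")
print(f"accuracy = {accuracy(predict, output):.2%}")
```

Running a check like this locally on the development set can catch format mismatches before you submit a run on CodaLab.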

In the web interface terminal at the top of the page, type the following command:

Sample instances from the dev set and evaluate the models:

cl run 'data:0x22dc32' 'sample_io.py:0x2a02b4' 'evaluate.py:0x1f8837' 'checkpoint:0x9f3d8f' 'fewshot_re_kit:0x70219b' '_processed_data:0xb636c7' 'models:0xbe1716' 'test_demo.py:0x8fa335' 'evaluate.sh:0xda5e83' 'bash evaluate.sh' --request-docker-image codalab/default-cpu

Note: The UUID of each file must be specified, e.g., 'data:0x22dc32'.
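Because the command lists many name and UUID pairs, it can be easier to assemble it from a table than to type it by hand. The helper below is a hypothetical convenience, not part of CodaLab: `build_cl_run` is an assumed name, the dependency list is shortened for brevity, and the UUID prefixes should be replaced with those of your own bundles.

```python
import shlex


def build_cl_run(dependencies, command, docker_image):
    """Assemble a `cl run` command line from name -> bundle UUID pairs."""
    args = ["cl", "run"]
    # Each dependency is passed as name:uuid, matching the form used above.
    args += [f"{name}:{uuid}" for name, uuid in dependencies.items()]
    args.append(command)
    args += ["--request-docker-image", docker_image]
    # shlex.join quotes only the arguments that need it (Python 3.8+).
    return shlex.join(args)


# Shortened dependency table; use the UUIDs of your own uploaded bundles.
deps = {
    "data": "0x22dc32",
    "sample_io.py": "0x2a02b4",
    "evaluate.py": "0x1f8837",
    "evaluate.sh": "0xda5e83",
}
print(build_cl_run(deps, "bash evaluate.sh", "codalab/default-cpu"))
```

The printed line can be pasted into the web interface terminal. Keeping the pairs in one table makes it harder to forget a bundle or mistype a UUID.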

Get the results:

To preserve the integrity of the test results, we do not release the test set to the public. Instead, we require you to upload your model to CodaLab so that we can run it on the test set for you. Before evaluating your models on the test set, make sure you have completed the evaluation on the development set as described above; we assume you are already familiar with uploading files and running commands on CodaLab. Note that you must use the data sampling file we provide, sample_io.py (FewRel 1.0 and FewRel 2.0 DA) or sample_io_nota.py (FewRel 2.0 NOTA), and the evaluation file evaluate.py in this worksheet.

cl run 'data:0xbac6f7' 'sample_io.py:0x2a02b4' 'evaluate.py:0x1f8837' 'checkpoint:0x9f3d8f' 'fewshot_re_kit:0x70219b' '_processed_data:0xb636c7' 'models:0xbe1716' 'test_demo.py:0x8fa335' 'evaluate.sh:0xda5e83' 'bash evaluate.sh' --request-docker-image codalab/default-gpu --request-gpus 1 --request-disk 2g --request-time 2d

Should you have any questions, please send an email to fewrel@googlegroups.com.